7.11.19 – CEPro – Bjorn Jensen

A router-on-a-stick (inter-VLAN) routing scheme can be very beneficial for security and network troubleshooting, so why do so many home-tech integrators categorically dismiss it in favor of standard Layer 3 switches?

Networking expert Bjørn Jensen of WhyReboot is tired of hearing integrators rail on the “router-on-a-stick” scheme for home networks. “I’ve heard grumbles in our industry about how the router-on-a-stick method should NEVER be used because performance is better on the switch,” he tells CE Pro.

The complainers might not recognize the security benefits of having a smart device like this “acting as a traffic cop between networks,” he says. “There is a balance one must find between performance, security, and price. If you need more performance you don’t have to do that on a switch.”

Here he explains the tradeoffs and virtues of router-on-a-stick. – editor

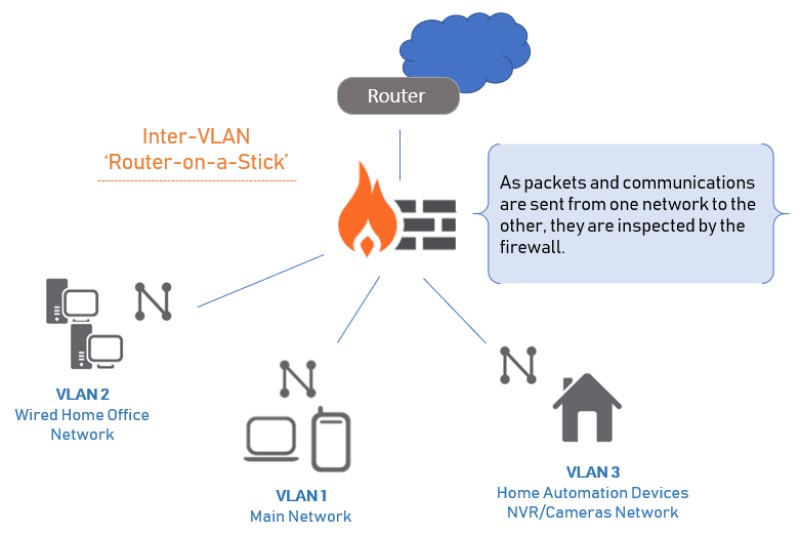

ROUTER-ON-A-STICK, also known as a “one-armed router,” is a method for running multiple VLANs over a single connection in order to provide inter-VLAN routing without the need for a Layer 3 switch.

Essentially the router connects to a core switch with a single interface and acts as the relay point between networks. If a device on one VLAN wants to talk to a device on another VLAN, that traffic must leave the switch and pass through the router which “routes” the traffic back to the switch, over the same interface, to the other VLAN.

This can be a problem: if that one interface is not fast enough, it becomes a bottleneck. In larger enterprise environments, the single interface is often bonded with one or more other interfaces in a LAG (Link Aggregation Group), which spreads traffic across multiple interfaces to avoid the bottleneck.
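To make the bottleneck concrete, here is a rough Python sketch of how much load a set of flows puts on the single router link. The `Flow` type, the VLAN IDs, and the traffic figures are all made up for illustration, and the model assumes a full-duplex link where each routed flow goes up to the router once and comes back down once.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Flow:
    """A hypothetical steady traffic flow between two VLANs."""
    src_vlan: int
    dst_vlan: int
    mbps: float


def trunk_load_mbps(flows: List[Flow]) -> float:
    """Load on the router link, per direction.

    Only flows between different VLANs are routed. Each one travels up to
    the router and back down the same link, so on a full-duplex link it
    counts once in each direction.
    """
    return sum(f.mbps for f in flows if f.src_vlan != f.dst_vlan)


def trunk_utilization(flows: List[Flow], link_mbps: float = 1000.0) -> float:
    """Fraction of the router link consumed (1.0 means saturated)."""
    return trunk_load_mbps(flows) / link_mbps


# Illustrative numbers only.
flows = [
    Flow(10, 10, 400.0),  # same VLAN: switched locally, never reaches the router
    Flow(10, 20, 150.0),  # routed: hairpins through the router
    Flow(30, 20, 50.0),   # routed: hairpins through the router
]

print(f"Router link load: {trunk_load_mbps(flows):.0f} Mbps per direction")
print(f"Utilization of a 1 Gbps link: {trunk_utilization(flows):.0%}")
```

Note that the 400 Mbps flow inside VLAN 10 never shows up on the router link at all, which is why the amount of traffic that actually crosses VLANs matters more than total traffic.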

Naturally, the easiest way to solve this bottleneck problem on smaller networks is to perform the inter-VLAN routing on a Layer 3 switch instead. That’s because switches have much faster backplane speeds and are much better at forwarding frames (Layer 2) – in this case packets (Layer 3) – to ports on the same switch that reside on another network segment.

This is the reason why many people assume that the “router on a stick” method is inferior, and to the uninitiated it makes sense that it would be.

The Many Benefits of Router-on-a-Stick

What many people fail to realize is that there are some pretty huge benefits to using a router, or more precisely a firewall, to be the gateway between networks.

To understand the benefits of putting a firewall in-between segments you must understand something that many people overlook: Most internal traffic never touches the router interface unless it’s going out to the internet.

In other words, any firewall access rules, traffic-shaping, anti-malware scanning, etc., cannot be used on the internal network UNLESS it hits a router interface.

This is the reason IP cameras can communicate with an NVR without even needing a gateway address: You can physically remove the router and they’ll still be able to communicate with each other. Sure, they won’t be accessible from the Internet, but they can still communicate with one another.

If you had a firewall in place there is nothing you could do with that firewall to keep them from communicating. There is essentially zero security that a firewall would provide in this scenario. The traffic must hit the router interface in order for the firewall to do its thing.
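The reason is simple subnet math: a device only hands traffic to its gateway when the destination is outside its own subnet. A minimal Python sketch of that decision, using the standard `ipaddress` module, is shown here; the addresses and the /24 prefix are assumptions chosen for illustration.

```python
import ipaddress


def needs_gateway(src_ip: str, dst_ip: str, prefix_len: int) -> bool:
    """Return True if traffic from src_ip to dst_ip must go to a router.

    A host compares the destination against its own subnet. If the
    destination is inside that subnet, the host delivers the traffic
    directly at Layer 2 and the router (and its firewall) never sees it.
    """
    src_network = ipaddress.ip_interface(f"{src_ip}/{prefix_len}").network
    return ipaddress.ip_address(dst_ip) not in src_network


# Hypothetical addressing: cameras and NVR share one subnet,
# the touchpanel lives on a different one.
camera = "192.168.20.11"
nvr = "192.168.20.5"
touchpanel = "192.168.30.40"

print(needs_gateway(camera, nvr, 24))      # camera to NVR: same subnet
print(needs_gateway(touchpanel, nvr, 24))  # touchpanel to NVR: different subnet
```

The camera-to-NVR check comes back `False`, so that traffic stays on the switch, while the touchpanel-to-NVR check comes back `True` and is the only one of the two that a firewall would ever get to inspect.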

Now, if you want to be able to use the various features of a full-fledged firewall, then the router-on-a-stick method starts to look a whole lot better. Some of these features include:

- shaping traffic between VLANs

- segmenting traffic with more granularity than simple access rules that most switches can provide

- scanning traffic between networks for malware or spyware, or any other

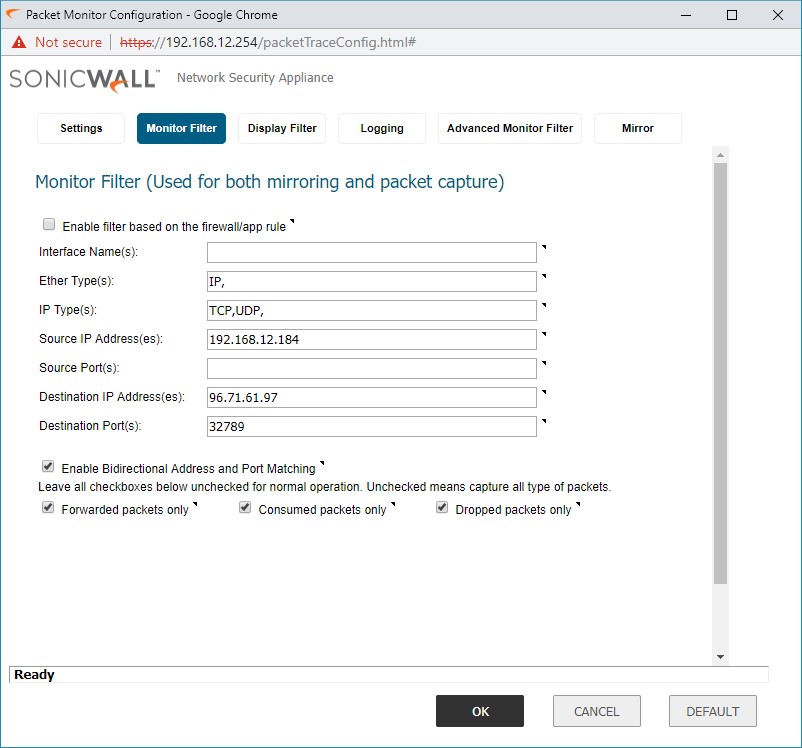

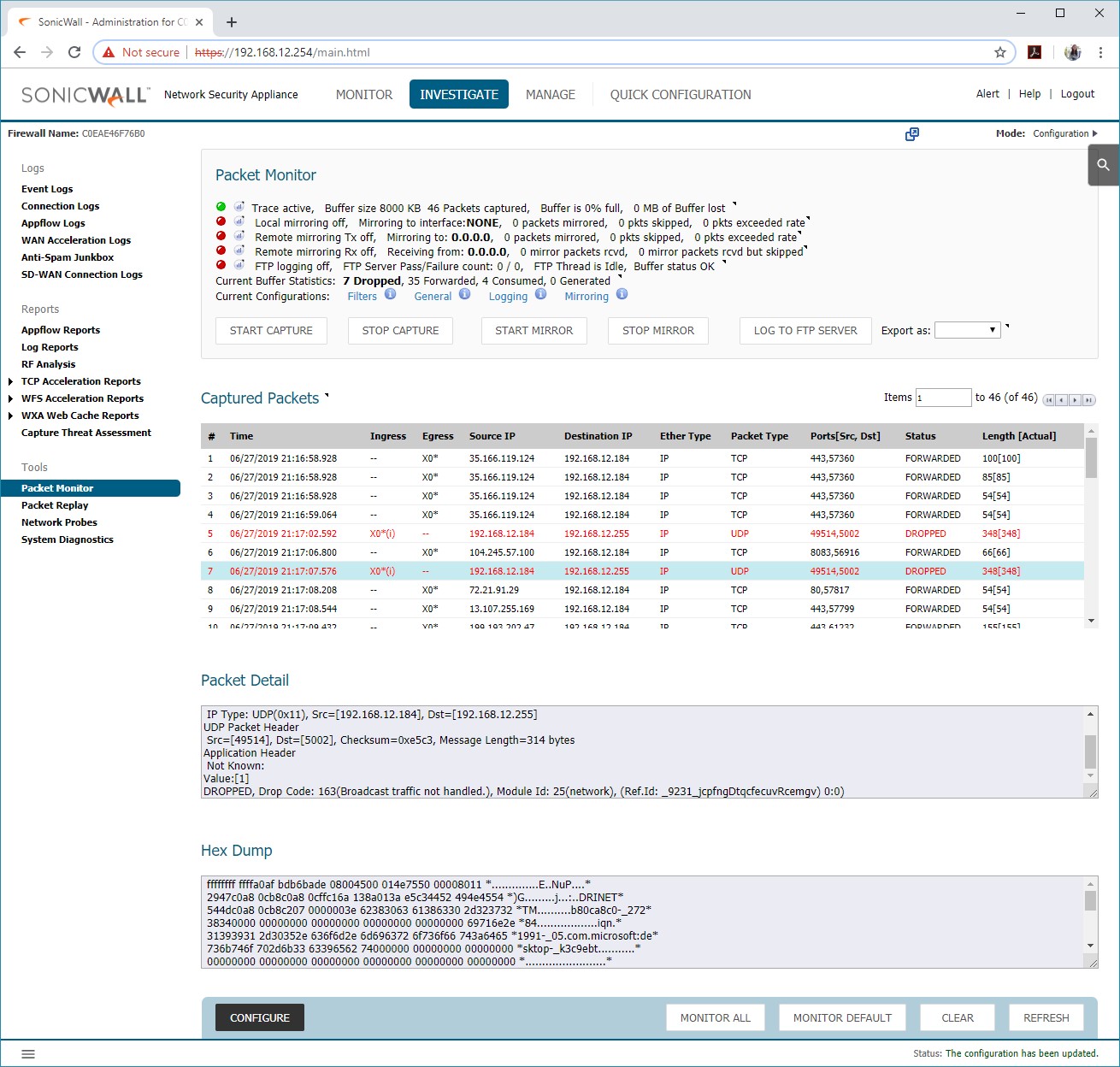

- packet monitoring

In fact, putting all of the security and traffic-shaping benefits aside, packet monitoring has become a go-to troubleshooting method on so many of our networks. It helps us prove that the network is doing what it’s supposed to, or show that there is an issue with a specific device on the network.

Being able to provide this information to manufacturers is essential in getting them to stop the finger-pointing and take a second look at their own product. Or it can help us diagnose possible errors in our configuration that may cause traffic to go somewhere it wasn’t intended.

Not that we don’t do packet monitoring on switches as well; we do. But without a NetFlow or sFlow collector onsite, a technician must physically plug a laptop into a switch port, mirror the ports we want to monitor to that port, and collect the traffic with Wireshark.

This is problematic for many reasons, the biggest of which is that it requires a truck roll. Being able to dial in remotely to a firewall and monitor the packets that cross our router interface makes this kind of troubleshooting a snap.

What’s better is that we can fine-tune it to only display dropped packets, or choose what ports and protocols to look at, or find source and/or destination IP addresses, which helps to improve the time to resolution.
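Every firewall exposes this kind of filtering through its own interface, so the following Python sketch is only a vendor-neutral illustration of the idea. The `PacketRecord` structure, its field names, and the sample log are hypothetical and do not correspond to any particular product's log format.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class PacketRecord:
    """One entry of a hypothetical firewall packet log."""
    src_ip: str
    dst_ip: str
    protocol: str  # e.g. "tcp" or "udp"
    dst_port: int
    action: str    # "allow" or "drop"


def filter_records(
    records: List[PacketRecord],
    *,
    action: Optional[str] = None,
    protocol: Optional[str] = None,
    dst_port: Optional[int] = None,
    src_ip: Optional[str] = None,
    dst_ip: Optional[str] = None,
) -> List[PacketRecord]:
    """Keep only the records that match every filter that was supplied."""
    wanted = {
        "action": action,
        "protocol": protocol,
        "dst_port": dst_port,
        "src_ip": src_ip,
        "dst_ip": dst_ip,
    }
    active = {name: value for name, value in wanted.items() if value is not None}
    return [
        r for r in records
        if all(getattr(r, name) == value for name, value in active.items())
    ]


# Made-up sample log for illustration.
log = [
    PacketRecord("192.168.30.40", "192.168.20.5", "tcp", 554, "allow"),
    PacketRecord("192.168.40.77", "192.168.20.5", "tcp", 554, "drop"),
    PacketRecord("192.168.40.77", "192.168.10.2", "udp", 53, "allow"),
]

# Show only dropped packets headed for the (hypothetical) NVR address.
for record in filter_records(log, action="drop", dst_ip="192.168.20.5"):
    print(record)
```

Narrowing the view to dropped packets for one destination is usually the fastest way to see whether a rule, rather than the device, is what's stopping the traffic.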

In a perfect world we would always be able to use the router-on-a-stick method to make use of these benefits.

Then Again … When VLANs Get Overwhelmed

There are, of course, times when VLANs need to exchange a lot of information with each other, and so ends our “perfect world.”

Usually you want to limit this as much as possible, and that’s where we apply the 80/20 rule: 80% of network traffic will stay in its own VLAN and never need to communicate with another, leaving only 20% that must traverse a router interface, whether on a Layer 3 switch or on a router/firewall itself.

In my experience for our industry it’s much less than 20%, especially if the network is designed properly.

Keep in mind this rule isn’t necessarily accurate in large enterprise networks today for a few reasons, one of them being that most of their resources are now in the cloud. For home networks, however, the 80/20 rule is pretty accurate.
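As a back-of-the-envelope check, the rule can be turned into a few lines of Python. The total traffic figure, the 1 Gbps interface speed, and the alternative percentages below are assumptions picked purely for illustration, not measurements.

```python
def cross_vlan_mbps(total_lan_mbps: float, cross_vlan_percent: float = 20) -> float:
    """Traffic expected to traverse the router interface.

    cross_vlan_percent is the share of traffic that leaves its own VLAN
    (20 under the 80/20 rule of thumb).
    """
    return total_lan_mbps * cross_vlan_percent / 100


def fits_interface(
    total_lan_mbps: float,
    interface_mbps: float,
    cross_vlan_percent: float = 20,
) -> bool:
    """True if the expected cross-VLAN traffic fits within the router interface."""
    return cross_vlan_mbps(total_lan_mbps, cross_vlan_percent) <= interface_mbps


# Illustrative figures: 3 Gbps of total LAN traffic, 1 Gbps router interface.
total = 3000.0
interface = 1000.0

for percent in (5, 20, 60):
    routed = cross_vlan_mbps(total, percent)
    verdict = "fits" if fits_interface(total, interface, percent) else "does NOT fit"
    print(f"{percent}% cross-VLAN -> {routed:.0f} Mbps, {verdict} in {interface:.0f} Mbps")
```

With these made-up numbers, both a well-segmented home network (5%) and the textbook 20% stay comfortably inside a gigabit interface, while a cloud-heavy enterprise-style split (60%) would not.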

In our industry most of the traffic you’ll see traversing a router interface would be from control signals.

For example, a Crestron processor on one VLAN may talk to an NVR on another. This is minor control traffic that certainly wouldn’t create a bottleneck.

In most systems the largest amount of data you’d see crossing a VLAN would likely be a homeowner accessing their camera system from their home VLAN, or even a touchpanel on a control VLAN serving up cameras from the surveillance VLAN. It is, in fact, generally a good practice to keep the security VLAN separate from control.

These are the things you need to be aware of, so make sure that your firewall has enough speed that it doesn’t create a bottleneck.

Bottleneck Antidotes

If you do run into this issue where you find too much communication between VLANs and your router interface can’t keep up, there’s good news.

Generally, you’ll find that whatever needs constant, high-bandwidth communication between VLANs sits within the same security realm. In these situations, you will most likely want to forgo the benefits of putting a dedicated router in between and use the faster switch interface to route the traffic instead.

The rest of the VLANs can still move through the router and if for some reason these high-bandwidth VLANs need to communicate to something else, like a control system, they can still pass that information through the router interface to gain the benefits I mentioned above.
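One way to think through this split design is as a simple per-pair decision. The Python sketch below is only an illustration of that reasoning: the `VlanPair` type, the VLAN names, the traffic figures, and the 900 Mbps headroom value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VlanPair:
    """A hypothetical pair of VLANs that need to talk to each other."""
    a: str
    b: str
    peak_mbps: float
    same_security_realm: bool


def route_on(pair: VlanPair, firewall_headroom_mbps: float) -> str:
    """Suggest where to route traffic between the two VLANs.

    - Traffic the firewall can comfortably carry stays on the firewall,
      keeping inspection, shaping and packet monitoring.
    - Heavy traffic between VLANs in the same security realm can move to
      the Layer 3 switch, giving up those firewall features for speed.
    - Heavy traffic across security realms still needs the firewall, so
      the answer there is a bigger firewall.
    """
    if pair.peak_mbps <= firewall_headroom_mbps:
        return "firewall"
    if pair.same_security_realm:
        return "switch"
    return "bigger firewall"


# Illustrative pairs only.
pairs = [
    VlanPair("media", "storage", 2500.0, same_security_realm=True),
    VlanPair("control", "surveillance", 40.0, same_security_realm=False),
    VlanPair("guest", "surveillance", 1500.0, same_security_realm=False),
]

for pair in pairs:
    print(f"{pair.a} <-> {pair.b}: route on {route_on(pair, 900.0)}")
```

The thresholds are a judgment call for each project; the point is simply that only the heavy, same-realm pairs give up the firewall, and everything else keeps its benefits.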

Or, if you designed the network properly the first time, you’ve prepared for this increase in traffic by sizing the right firewall.

Most networks in our industry don’t require it, but some of us are fortunate enough to work on pretty large projects where, inevitably, you aren’t going to be able to push even control traffic over a single gigabit interface.

Luckily, the network manufacturers have responded to this need with multi-gig interfaces. If you require more bandwidth, just get a firewall that can handle the additional load, and make sure that you have a matching switch interface ready to accept that multi-gig connection.

There is always the delicate balance between price, performance, and protection so just like any other aspect of our industry you must weigh the pros and cons.

Sometimes you can go with the bigger firewall; sometimes you may have to design the network so that resource-intensive inter-VLAN traffic goes through the switch itself.

A careful, calculated design is paramount, and knowing all of your options, and all of the capabilities of your gear, can position you for success in the long run.

Setting the Rules


Coming from a background in large commercial computer systems administration and engineering, Bjørn Jensen found a home with a Platinum Crestron Dealer as the IT Director. While there he saw the growing need for more complex, managed networks working as the core of any large residential home automation system. Knowing that Ethernet networks would become ubiquitous in our industry, Bjørn decided to form a company dedicated to providing commercial-grade, plug-and-play networks for ESCs who didn’t have the time or the knowledge to properly implement what is needed in some of the larger, more complex environments. Since then he’s become entrenched in the CE community by becoming a member of the inaugural CE Pro Blog Mob and by writing and instructing courses for CEDIA’s new certification, the Residential Networking Specialist. For more information see the following: About WhyReboot. Have a suggestion or a topic you want to read more about? Email Bjørn at Bjorn@whyreboot.com