4.24.20 – SIW –

Genetec president explains why the security industry needs to pump the brakes on so-called AI solutions during virtual tradeshow

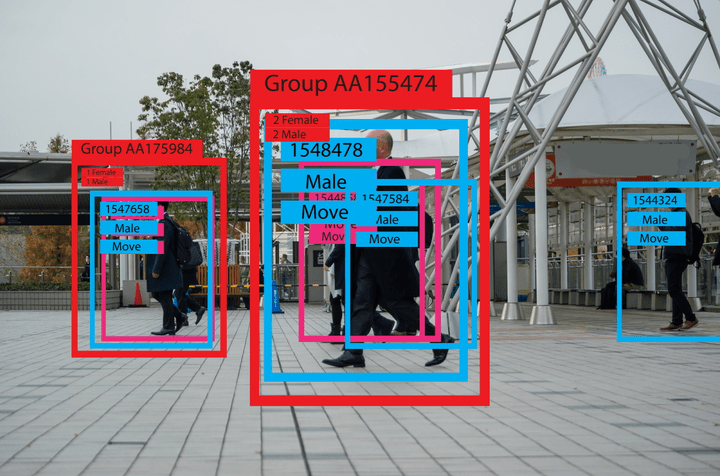

Over the past several years, the security industry has been inundated with products espousing the benefits of artificial intelligence (AI). As many are quick to point out, however, true AI, which is the ability of machines to think and reason on their own without humans in the decision-making loop, is non-existent at the moment. The analytics products currently being leveraged across the industry are really machine learning solutions designed to help with the arduous and somewhat monotonous task of detecting and classifying objects such as people, vehicles and animals.

But even these machine learning products are only as good as the human-developed algorithms that drive them. Such was the cautionary message shared by Genetec President Pierre Racz during the company’s “Connect’DX” virtual tradeshow, which was held earlier this week.

“I’m not knocking the technology, but we need proper engineering and proper engineering requires knowledge of these limits of the technology,” Racz said. “We have to avoid wishful thinking and anthropomorphizing and we have to be open and honest about what these limits are. We do not want to build systems that provide a false sense of security.”

With today’s machine learning solutions, Racz said it is paramount to define the problem you are trying to solve.

“Artificial intelligence does not exist, but real stupidity exists. You mustn’t confuse the appearance of intelligence with actual intelligence,” he added. “Intelligent automation keeps the human in the loop and the human can provide you with the intuition and creativity while the machine does the heavy lifting.”

At Genetec, Racz said the company is using intelligent automation (IA) tools and statistical machine learning but not what much of the market is referring to today as AI. Having been in the software business for 40 years, Racz said he’s already been through five AI “winters,” each of which came after the technology appeared on the scene and then quickly fizzled when it failed to live up to what the Gartner Hype Cycle refers to as the “peak of inflated expectations.”

Problems with AI

One of the telltale signs that this is occurring with a technology is when negative headlines about it start to appear in the press, and this is already happening with regard to AI this time around. In fact, one of the most prominent AI technologies on the market, IBM’s Watson supercomputer, which famously beat two of the most successful Jeopardy champions of all time, was recently called “a piece of shit” by a doctor at a Florida hospital after recommending the wrong treatment for cancer patients.

“(IBM) thought they could apply this to the medical system and Watson learned quickly how to scan (medical) articles but what Watson didn’t learn was how to read the articles the way that doctors do,” Racz explained. “The information that physicians extract is more about therapy and there are small details and footnotes and they extract more than the major point of the study. Watson, whose reasoning is purely statistical-based, only looks at the first order of the paper but doctors don’t work that way.”

Racz also pointed to the problems autonomous vehicles are currently experiencing, namely their inability to respond appropriately when they encounter atypical data during a drive, as another prominent example of the learning pains of AI. In particular, he said a recent crash in Arizona, in which a self-driving Uber vehicle struck and killed a pedestrian walking her bicycle across the road, is a prime example of this.

“The biggest failure was that the AI-engine driving that car had only been trained on humans crossing the road at a crosswalk. To see a human cross the road where there was no crosswalk lowered its confidence score,” he said. “It was also trained to see humans on a bicycle but a human walking beside the bicycle was something it wasn’t familiar with and so that also confused the AI. And I say AI as a pejorative.”

In fact, Racz said the AI we have today has the reasoning power of an earthworm. “The danger is not that AI is going to take over the world and enslave us, the danger is they do exactly what we ask it to,” Racz added.

Real World Implications for Security

Racz also presented a series of photos in which a small distortion was introduced that vastly changed how an AI algorithm classified the object in the photo and how confident it was in that classification. In one case, a lifeboat that was identified with 89% confidence was subsequently classified as a Scottish Terrier with more than 99% confidence after this distortion was inserted into the image.

“Think about this: If you’re using facial recognition as a single factor for access control, I could walk in front of your access control camera with my smartphone generating an adversarial image and I could get the system to recognize me as anybody in your database,” he said. “Of course, this is not a cheap attack. It would most likely require me to break into your system and find out what is your training set, what is the algorithm you’re using but this is what you have to do; you have to decide if you’re going to use such technology as a single factor for access control. What is the impact of failure?”
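To make the adversarial-image effect concrete, the following is a minimal toy sketch, not the algorithm or the photos Racz showed. It assumes a hypothetical two-class linear classifier over 1,000 "pixels" and an attacker who knows the model's weights, which is the kind of inside knowledge Racz says such an attack would most likely require. The labels, weights, pixel values and the `eps` step size are all invented for illustration.

```python
import math

# Toy labels borrowed from the article's example; the model itself is hypothetical.
LABELS = ["lifeboat", "Scottish terrier"]


def softmax(scores):
    """Turn raw class scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def classify(weights, pixels):
    """Return (label index, confidence) for a linear softmax classifier."""
    scores = [sum(w * p for w, p in zip(row, pixels)) for row in weights]
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best, probs[best]


def adversarial(weights, pixels, source, target, eps):
    """Nudge every pixel by at most eps in the direction that raises the
    target score relative to the source score (a sign-of-gradient step)."""
    doctored = []
    for p, w_t, w_s in zip(pixels, weights[target], weights[source]):
        direction = w_t - w_s
        step = eps if direction > 0 else -eps if direction < 0 else 0.0
        doctored.append(min(1.0, max(0.0, p + step)))  # keep pixels in [0, 1]
    return doctored


def demo():
    n = 1000  # assumed image size, purely illustrative
    w_boat = [0.1 if i % 2 == 0 else -0.1 for i in range(n)]
    w_dog = [-w for w in w_boat]
    weights = [w_boat, w_dog]
    # A nearly uniform gray image that leans slightly toward "lifeboat".
    image = [0.512 if i % 2 == 0 else 0.488 for i in range(n)]

    before = classify(weights, image)
    doctored = adversarial(weights, image, source=0, target=1, eps=0.04)
    after = classify(weights, doctored)
    max_change = max(abs(a - b) for a, b in zip(image, doctored))
    return before, after, max_change


if __name__ == "__main__":
    before, after, max_change = demo()
    print(f"before: {LABELS[before[0]]} ({before[1]:.1%})")
    print(f"after:  {LABELS[after[0]]} ({after[1]:.1%})")
    print(f"largest per-pixel change: {max_change:.2f} on a 0-1 scale")
```

In this toy setup no pixel moves by more than 4% of its range, yet the prediction flips from "lifeboat" at roughly 92% confidence to "Scottish terrier" at more than 99%. Real image classifiers are far more complex, but the sketch shows why many tiny, coordinated changes can add up to a large swing in the output.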

Though many organizations are now buying into intelligent video analysis and other so-called AI security tools, Racz cautions that they really need to do their research first.

“A lot of companies have jumped on the bandwagon and most end users don’t really know the limits of the technology and they don’t know if it was actually well-engineered. They don’t know if the training set has bias, so my word of caution is caveat emptor: buyer beware,” Racz added. “You can design these systems and they can look really good in a demonstration during a sales pitch, but you have to make sure you’ve properly engineered for false positives and the false negatives because there will be those. You also have to make sure there are not unanticipated things in your training set. Remember, the adversarial system only needs to get it right once; you have to get it right every time.”
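For readers who want to put numbers on the false positives and false negatives Racz mentions, here is a small sketch of how those rates could be tallied from a log of analytics alarms. The function names and the event counts are hypothetical and chosen only for illustration; they do not come from Genetec or from any real deployment.

```python
def error_rates(events):
    """Tally error rates from (alarm_raised, real_threat) boolean pairs."""
    tp = fp = tn = fn = 0
    for alarm, threat in events:
        if alarm and threat:
            tp += 1  # real threat, alarm raised
        elif alarm and not threat:
            fp += 1  # false positive: alarm with no threat
        elif threat:
            fn += 1  # false negative: threat with no alarm
        else:
            tn += 1  # nothing happened, nothing flagged
    return {
        # Share of harmless events that still triggered an alarm.
        "false_positive_rate": fp / (fp + tn) if (fp + tn) else 0.0,
        # Share of real threats the system missed.
        "false_negative_rate": fn / (fn + tp) if (fn + tp) else 0.0,
        # Share of alarms that pointed at a real threat.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
    }


def sample_events():
    """Made-up log: 10 real threats hidden among 990 harmless events."""
    return (
        [(True, True)] * 8         # threats caught
        + [(False, True)] * 2      # threats missed
        + [(True, False)] * 20     # nuisance alarms
        + [(False, False)] * 970   # correctly ignored
    )


if __name__ == "__main__":
    for name, value in error_rates(sample_events()).items():
        print(f"{name}: {value:.1%}")
```

With these invented counts the false positive rate is only about 2%, which would look good in a demonstration, yet fewer than a third of the alarms point at a real threat and two of the ten threats are missed entirely. That gap between a polished demo and day-to-day operation is the kind of thing a buyer would want to measure before relying on a system.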